The Future of Education: 8 Trends to Watch in 2024

Following a year of change across the education industry, here are 8 big education trends to watch in 2024.

6 Ways AI is Already Revolutionizing Education

Here’s how a philanthropic initiative at Salesforce is closing the AI access gap by providing flexible funding, pro bono expertise, and technology to organizations.

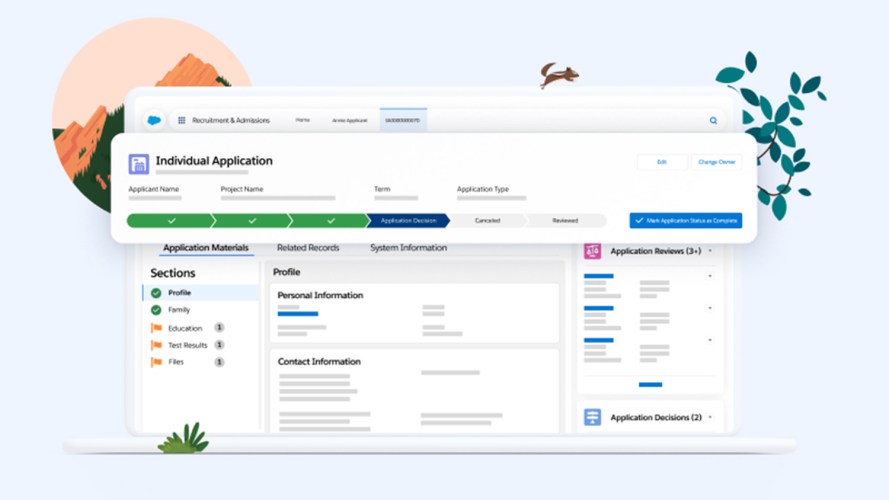

Salesforce’s Reimagined Education Cloud: Building Learner Relationships for Life

The education industry is navigating global uncertainties and education-specific issues such as the need to improve enrollment and graduation rates, develop new strategies for staff recruitment and retention, and deliver on higher student…

Athleteforce: From Elite Careers in Sports to Elite Careers in Technology

Nelson Mandela once said, “Sport has the power to change the world. It has the power to unite people in a way that little else does.” Whether it provides a safe place for…

Announcing the Third Edition of the Connected Student Report

Earlier this year, you got a sneak peek into the early findings from the third edition of the Connected Student Report. Now you can see ALL the findings, as the latest edition is…

Announcing the Community Impact Report 2022

Today, we’re excited to announce the release of the Community Impact Report 2022. In our fifth annual report, we highlight how our customers are using the resources provided by Salesforce.org and our partners…

6 Reasons to Attend the 20th Annual Dreamforce This September

Have you heard the news? Dreamforce 2022 — primed to be our most impactful Dreamforce ever — will return to San Francisco from September 20 through September 22, bringing the entire Salesforce community…

How Salesforce and HBCUs are Partnering with Purpose

Historically Black Colleges and Universities (HBCUs) are more than just higher education institutions. They are sources of accessibility, equity, opportunity, and innovation. Moreover, they say people make a place, and that is certainly…

The Importance of a CRM Vision and Strategy

This post was originally published on 7/11/2016 and updated on 8/12/2022. While the need for a constituent relationship management (CRM) system and an overall CRM vision has become clear, many organizations have struggled…

What is a CRM for Nonprofits and Education Institutions?

A CRM is customer relationship management technology that helps nonprofits, educational institutions, and businesses manage their relationships with current and prospective constituents, students, and customers. A CRM gives organizations the tools to streamline…